For more than two decades, robotics market commentaries have predicted a shift, particularly in manufacturing, from traditional industrial manipulators to a new generation of mobile, sensing robots, called “cobots.” Cobots are agile assistants that use internal sensors and AI processing to operate tools or manipulate components in a shared workspace, while maintaining safety.

It hasn’t happened. Companies have successfully deployed cobots, but the rate of adoption is lagging behind expectations.

According to the International Federation of Robotics (IFR), cobots sold in 2019 made up just 3% of the total industrial robots installed. A report published by Statista projects that in 2022, cobots’ market share will advance to 8.5%. That is a fraction of the share forecast in a February 2018 study cited by the Robotic Industries Association, which projected that by 2025, 34% of the new robots sold in the U.S. would be cobots.

To see a cobot in action, here’s the Kuka LBR iiwa. To ensure safe operation, cobots come with built-in constraints, like limited strength and speed. Those constraints have also held back their adoption.

As cobots’ market share languishes, standard industrial robots are being retrofitted with computer vision technology, allowing for collaborative work combining the speed and strength of industrial robots with the problem-solving skills and finesse of humans.

This article will document the declining interest in cobots, the reasons for it and the technology that is replacing them. We report on two firms developing computer vision technology for standard robots and describe how developments in 3D vision and so-called “robots as a service” (yes, RaaS) are defining this faster-growing second generation of robots that can work alongside humans.


What are robotics sensing platforms?

There’s potential in humans and robots working side by side. In advanced manufacturing processes, there are tasks that require a robot’s speed and power, and tasks that require a human touch. On the assembly line of an automotive plant, for instance, industrial robots are used to move the cars along the assembly line. But a human expert’s touch is still required to fasten many of the bolts or do some of the welding. The question is, how to let robots do the heavy lifting while keeping humans safe?

Also called “control systems,” robotics sensing platforms are a combination of external sensors and software for monitoring robots and humans while operating inside controlled areas called work cells. These platforms are distinct from cobots in that they integrate with robots that, unlike cobots, have no internal sensors.

The essential technologies in this niche of robotics are real-time motion planning (RTMP) and speed and separation monitoring (SSM). RTMP is the general tracking of all objects in a robotic work cell, including the robots, materials and human operators. SSM is the specific tracking of robots and human operators. Achieving safety certifications for RTMP and SSM systems is critical to the growth of companies developing robotics sensing platforms.
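To make the SSM idea concrete, here is a minimal sketch in Python of the kind of check such a system might run: given the current robot speed, estimate how much distance must be kept between robot and operator, and signal a stop if the measured separation falls below it. This is an illustration only, loosely in the spirit of the separation-distance approach in collaborative-robot safety guidance such as ISO/TS 15066. It is not the method used by any company named in this article. The class `SsmParams`, the functions `protective_distance` and `must_stop`, and every numeric value are assumptions chosen for the example, not figures from a standard or a vendor.

```python
from dataclasses import dataclass


@dataclass
class SsmParams:
    """Illustrative parameters; all values are assumptions, not certified figures."""
    human_speed: float = 1.6    # m/s, assumed worst-case speed of an approaching operator
    reaction_time: float = 0.1  # s, assumed time for the system to detect and react
    stopping_time: float = 0.3  # s, assumed time for the robot to brake to rest
    margin: float = 0.2         # m, assumed allowance for sensing and position uncertainty


def protective_distance(robot_speed: float, p: SsmParams) -> float:
    """Estimate the minimum robot-to-human separation (m) at a given robot speed (m/s)."""
    # The operator may keep approaching during both the reaction and the braking phase.
    human_travel = p.human_speed * (p.reaction_time + p.stopping_time)
    # The robot keeps moving at full speed until the system reacts.
    robot_reaction_travel = robot_speed * p.reaction_time
    # Simplification: the robot decelerates linearly to zero while braking.
    robot_stopping_travel = 0.5 * robot_speed * p.stopping_time
    return human_travel + robot_reaction_travel + robot_stopping_travel + p.margin


def must_stop(separation: float, robot_speed: float, p: SsmParams) -> bool:
    """Return True when the measured separation is below the protective distance."""
    return separation < protective_distance(robot_speed, p)


if __name__ == "__main__":
    params = SsmParams()
    for separation in (2.0, 1.0, 0.5):
        print(separation, must_stop(separation, robot_speed=1.0, p=params))
```

The sketch also shows why such systems monitor speed as well as separation: a slower robot needs a smaller protective distance, so reducing speed as a person approaches can keep a cell running where a fixed barrier would force a full stop.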

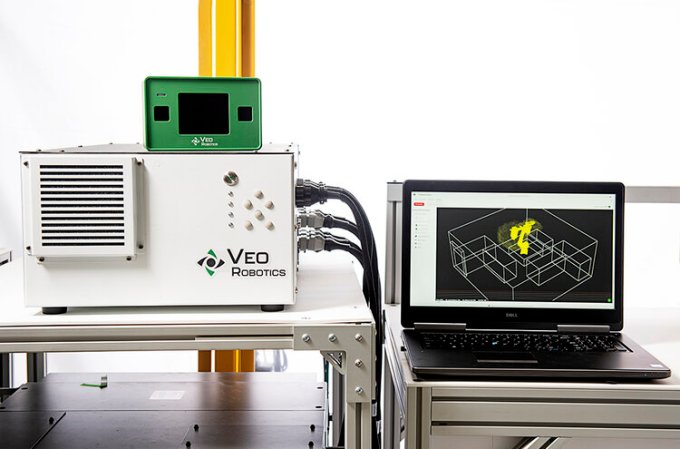

Veo Robotics sells a software and hardware platform called FreeMove that uses 3D vision sensing to plan and monitor the activity of industrial robots, enabling safe operation with humans in a shared space.

**Image Credits:** Veo Robotics

CEO Patrick Sobalvarro says the platform helps manufacturers become more flexible and responsive: “Say you are a dishwasher manufacturer and you learn that the motor component in a certain model has defects and has to be substituted. Using even a slightly different part, when robots are involved in the assembly line, could mean a lot of time (and money) spent retooling, calibrating and doing risk assessments.” With a feature-rich platform for robotic control, manufacturers can employ “advanced manufacturing” strategies and be more adaptable to changes like this.

Veo Robotics made a developer kit available for the FreeMove platform in June 2019, and has partnered with robot manufacturers, such as Kuka, Fanuc, ABB and Yaskawa, to conduct trials using the technology in the automotive, consumer packaged goods and household appliance industries. Veo Robotics has also been working with certification agencies to have the technology’s safety verified. According to the company, FreeMove will be generally available in early 2021.

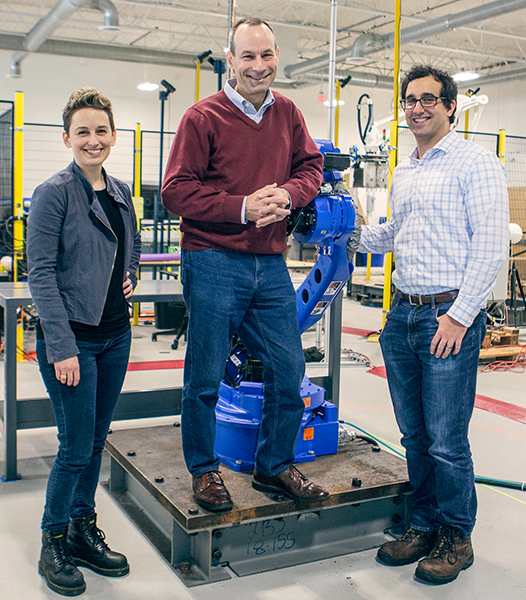

Veo Robotics founders: CTO Clara Vu, CEO Patrick Sobalvarro and VP Engineering Scott Denenberg. **Image Credits:** Veo Robotics

These safety certifications ensure that the platform complies with standards set by organizations such as ISO or the American National Standards Institute (ANSI). For a robotics sensing platform, this distinction is the “holy grail,” said Peter Howard, CEO of Realtime Robotics. Based in Boston, Realtime is also building a 3D vision platform for robotic automation. In responses by email, Howard wrote that he expects safety certifications to unlock “massive” potential for his company’s niche. “Robots will be able to be deployed in markets and applications that were previously prohibitive due to safety and resulting cost/complexity,” he wrote.

How do robotics sensing platforms enable standard robots to collaborate with humans?

Realtime Robotics offers a hardware and software-based solution that manufacturing engineers can use to run simulations in a virtual environment to optimize movements, configure robots in a work cell and actively intervene in real environments to avoid collisions with humans.

The simulation program uses a “hardware-in-the-loop” approach, which takes into account all the physical elements of a manufacturing environment. “By utilizing a hardware-in-the-loop approach and offering robotics cells the ability to instantly plan and replan paths based on any and all dynamic obstacles,” Howard said, “manufacturers will have the ability to safely and affordably deploy industrial robots with human operators in the loop. This will be an inflection point for robotics, enabling the automation of broad new applications.”

Realtime Robotics recently announced a partnership with Siemens Digital Industries Software to simplify the integration of Realtime Robotics’ hardware with industrial robots that do not have dedicated vision systems. The result of this collaboration, Howard said, is a major reduction in the “time to deploy and adapt to modifications, both during simulation and on the shop floor.” In recent projects with automotive OEMs, Howard said this collaboration “has enabled an 80%+ reduction in programming time and cost.”

Why use 3D vision?

These two companies are focusing on vision systems, as opposed to other types of sensors. University of Illinois Urbana-Champaign associate professor Kris Hauser, director of the Intelligent Motion Lab, a research group focused on human-robot interaction, said that advances in computer vision algorithms support this choice. These algorithms “nowadays give decent performance off-the-shelf for a variety of detection, tracking and 3D shape estimation tasks,” Hauser wrote in responses by email. He noted limitations to 3D vision, however: “[3D vision systems] have a hard time recognizing dark, transparent, and reflective objects, and certain technologies suffer in outdoor lighting conditions.”

These concerns are negligible in a setting like a factory floor, where lighting can be easily controlled. Installing and integrating a 3D vision system with existing industrial robots can be straightforward and quick. According to Sobalvarro, Veo Robotics’ FreeMove platform can be installed in as little as one day, and pre-trained computer vision algorithms can be used to rapidly develop new processes.

“Reducing the complexity, time and cost of deploying robotic work cells and production lines is a primary value-add for Realtime Robotics,” CEO Peter Howard said. “Our technology simplifies and accelerates the entire deployment process from digital twin creation (a model of a robotic arm that is used to plan movements for a real arm on an assembly line) and commissioning to physical layout and build-out. Our Realtime Controller automates multirobot path planning and eliminates the need to enter way-points and manage interference zones.” The product development road map includes optimization of work-cell configuration and sequence, he said.

**Image Credits:** Realtime Robotics

Veo and Realtime are both taking a robot-agnostic approach, developing platforms that can integrate with novel cobots or with the much larger installed base of “sightless” industrial machines from companies like Kuka, Mitsubishi, ABB, Kawasaki and Universal Robots.

What is robots as a service?

In addition to safety certifications, the use of robots as a service (RaaS) could help accelerate adoption of 3D vision systems. Large robot manufacturers such as those named above have established initiatives to expand access to these industrial machines, using this model for renting robots. The impact for small- to medium-sized manufacturers is not necessarily to reduce cost, but to reduce the risk of capital investment.

Robotics sensing platforms also reduce risk for these manufacturers. They allow robots to be used in settings where the cost of retooling each time a robotic process is changed or added would previously have prevented adoption of robotic manufacturing.

The factory floor’s near future

Could cobots eventually realize their promise, replacing the fleets of robots used on assembly lines that have no dedicated sensing systems? That remains unclear, but it is not likely to happen this decade, due to the constraints on force and speed covered in this article.

Instead, the current generation of cobots and the iterations that follow will be used for less-demanding tasks, both in and outside of the factory. Cobots can be effective for tasks like warehouse picking, security surveillance, cleaning floors or sanitizing areas with UV light.

For assembly line tasks in the coming decade, manufacturers will still be relying on the speed and power of traditional robots. Sensing platforms provide a “hive brain” for these robots that are otherwise blind. That will enable high-powered and high-speed robots to enter more industries and manufacturing settings, where previously they would have been cost-prohibitive and constrained by safety risks and the cost of retooling.

Once these robotic sensing platforms have completed safety certifications, manufacturers can start to remove the physical barriers that are currently separating employees and robots, realizing some of the vision that inspired a generation of cobots. With the barriers gone, there will be more effective and direct human-and-robot collaboration on assembly lines, leading to overall productivity gains and more rapid product innovation.

