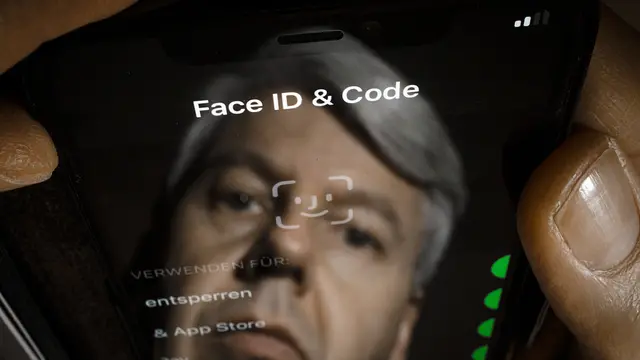

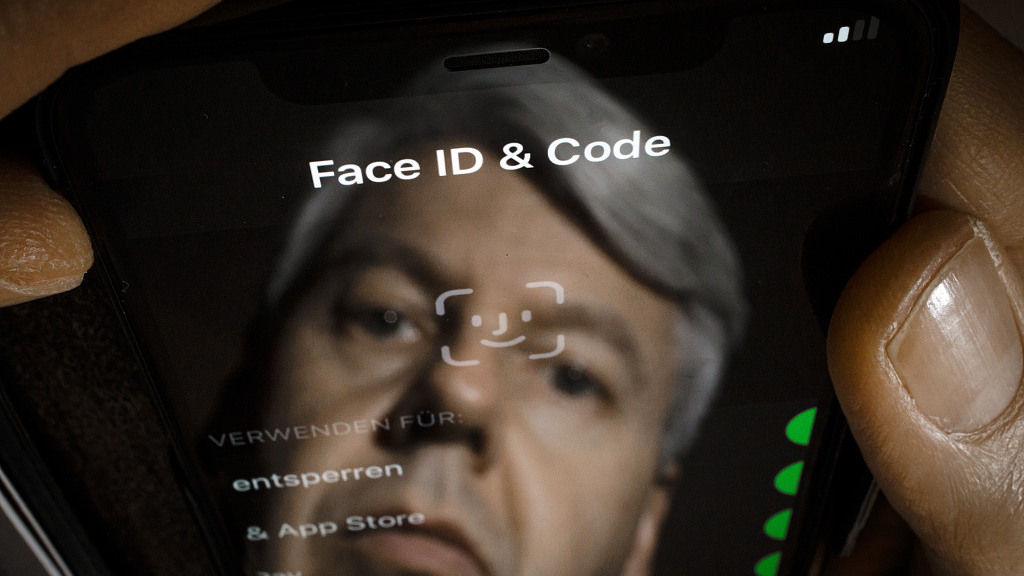

A man is reflected in a smartphone displaying the Face ID lettering in Berlin, Germany, January 9, 2020. /CFP

IBM is getting out of the facial recognition business, saying it's concerned about how the technology can be used for mass surveillance and racial profiling.

Ongoing protests responding to the death of George Floyd have sparked a broader reckoning over racial injustice and a closer look at the use of police technology to track demonstrators and monitor American neighborhoods.

IBM is one of several big tech firms that had earlier sought to improve the accuracy of their face-scanning software after research found racial and gender disparities. But its new CEO is now questioning whether it should be used by police at all.

"We believe now is the time to begin a national dialogue on whether and how facial recognition technology should be employed by domestic law enforcement agencies," wrote CEO Arvind Krishna in a letter sent Monday to U.S. lawmakers.

IBM's decision to stop building and selling facial recognition software is unlikely to affect its bottom line, since the tech giant is increasingly focused on cloud computing while an array of lesser-known firms have cornered the market for government facial recognition contracts.

"But the symbolic nature of this is important," said Mutale Nkonde, a research fellow at Harvard and Stanford universities who directs the nonprofit AI For the People.

Nkonde said IBM shutting down a business "under the guise of advancing anti-racist business practices" shows that it can be done and makes it "socially unacceptable for companies who tweet Black Lives Matter to do so while contracting with the police."

Bias in AI technologies

The practice of using a form of artificial intelligence (AI) to identify individuals in photo databases or video feeds has come under heightened scrutiny after researchers found racial and gender disparities in systems built by companies including IBM, Microsoft and Amazon.

A 2019 study by the Massachusetts Institute of Technology found that none of the facial recognition tools from the three companies was 100 percent accurate at recognizing men and women with dark skin.

Another study, conducted by the U.S. National Institute of Standards and Technology, suggested that the technology is far less accurate at identifying people of color, especially African Americans and Asians.

(With inputs from AP)