Imagine if you could flip a switch on Facebook, and turn all the conservative viewpoints that you see liberal, or vice versa. You’d realize your news might look nothing like your neighbor’s.

See for yourself: Using data from Facebook, The Wall Street Journal made an online tool that lets you explore two live streams of posts you likely wouldn’t see in the same Facebook account. One draws from publications where the majority of shared links fell into the “very conservative” category during Facebook’s study, while the other pulls from sources whose links, for the most part, aligned “very liberal.” The headline “American Toddlers Have Shot 23 People” sits right across the aisle from “VA Tries to Seize Disabled Vet’s Guns.”

You can get lost for hours studying these alternate realities. What I see is a missed opportunity for technology to break down walls during this particularly divided moment. With access to more information than ever online, how could other points of view be so alien?

One reason: Facebook’s home page News Feed is run by a personalization algorithm that feeds you information it thinks you want to see. It’s a machine tuned to promote sunset selfies and live cat videos, not foster political discourse. Why not add an opposing-viewpoints button that gives me the power to see a headline from another side?

Sure, the other side can be really annoying—especially if you not only disagree with a post but also know it is incorrect. But I’m not the only one getting restless about our shrinking perspectives. President Barack Obama recently expressed dismay about a world where Democrats and Republicans alike live in echo chambers and “just listen to people who already agree with them.” Conservative commentator Glenn Beck this week shared similar feelings, directing his frustration at the social network: “Facebook truly is the only communal experience we now have in some ways. We need to see what ‘the other side’ is talking about.”

Around the globe, Facebook members spend 50 minutes a day on the social network and its sister services Messenger and Instagram. In America, more than 60% of members get political news from Facebook—and liberals are particularly tuned in to it as a source, according to Pew Research Center. Facebook has courted serious news outlets, including the Journal, to publish “instant articles” directly into its streams.

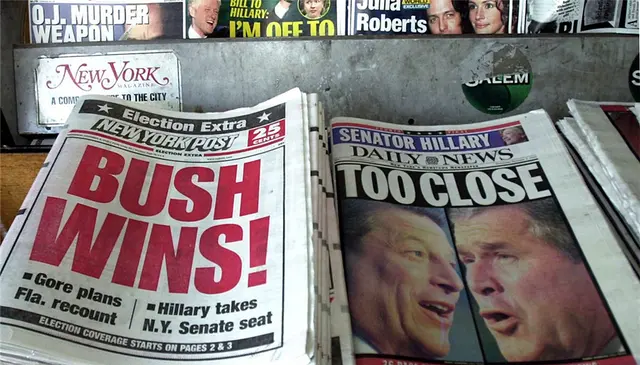

Facebook is replacing the old corner newsstand, where the covers of Forbes and Rolling Stone might sit inches apart. On Facebook, it isn’t easy to recreate that experience, or even tweak what shows up in your News Feed (though I have a few tips later in this column). Of course, Facebook isn’t to blame for ignorant commentators or closed minds—they’ve been around as long as there’s been news. But it can no longer shrug off accountability for its invisible hand on news.

Do we really want tech companies shaping our news? The truth is, they already do.

Facebook got into a heap of trouble last week following allegations that contractors who edit a “Trending” headlines box suppressed conservative news. (Facebook has denied this.) But a much bigger part of our Facebook experience is the News Feed, which was created by humans and carries its own biases. With a goal to get you to spend more time on Facebook, it uses 100,000 signals from you, your friends and the rest of the world to determine whether you want to read more or less about gun violence, or whether the Kardashians are more important than the situation in Kurdistan. And for such an important information gateway, we know remarkably little about how it works.

Debate has swirled for years over whether personalization technology is actually closing us off to outside points of view and distorting reality by promoting conspiracy theories. Liberal tech activist Eli Pariser dubbed it the “filter bubble.” The problem is difficult to quantify, but you can find variations of it all over: Google customizes search results, Amazon recommends what to read, Netflix downplays search for its own personalized suggestions.

Facebook says the problem is us. Last year, the academic journal Science published a peer-reviewed article by Facebook researchers that showed that, on average, almost 29% of hard news that appears in the News Feed cuts across ideological lines. They concluded Facebook’s algorithm doesn’t create a filter bubble, but simply reflects one we make for ourselves. Other social scientists disagreed with both the methodology of the study and its conclusions, comparing it to the argument that “guns don’t kill people, people kill people.”

The echo chamber blame game may rage on, but the real issue is how technology can serve to broaden our views. That’s what inspired my Journal colleague Jon Keegan to create the “Blue Feed, Red Feed” tool.

His source was data Facebook’s researchers included with their 2015 report. With (anonymized) access to our behavior on the social network, they tracked and analyzed the news shared by 10.1 million U.S. users who had identified their political views on their profiles. The Facebook researchers mapped those news sources along the right and left wings based on who shared what.

“Blue Feed, Red Feed” is a near-live stream of posts from these sources—though, importantly, not a simulation of what a conservative or liberal person actually sees. (You won’t find the Journal or its biggest competitors on either feed, because their content was shared by users spread across more of the political spectrum, according to Facebook. Read more about the tool’s methodology here.)

The result is a product Facebook hasn’t made for its users: a feed tuned to highlight opposing views. Once you take a detour to the other side, your own news looks different—we aren’t just missing information, we’re missing what our neighbors are dwelling on. (“Blue Feed, Red Feed” will be updated with new topics throughout this election season, so you can keep using it.)

While you should never rely on Facebook as the sole source of your news, there are ways you can pop your filter bubble inside the network, too.

First, you have to understand how political stories get into your News Feed. They come from two places: people you’ve “friended” writing a post or sharing a piece of content, and news sources whose Facebook pages you’ve “liked.” From those sources, Facebook’s algorithm presents posts in an order it thinks you’d like to see them.

To increase the range of sources you see, you could “like” more news and opinion outlets. Doing this diversified my News Feed only a little in the short term. If you go down this path, beware that liking a page (even ones contrary to your regular views) is an action that might be seen by your friends. (You can make your likes private in Settings.)

Facebook has taken a number of other small steps to improve the News Feed. When you click on a post with an article, Facebook pops underneath it a box called “people also shared,” with related posts that might broaden your view. And as of a few months ago, you can do more than “like” a news story—you can even “sad” or “angry” it, which are particularly useful in a political season.

While you can’t exactly customize your News Feed, you can use the recently expanded News Feed preferences, found under Settings, to prioritize people or news sources you’d like to see first. There you’ll also find a “discover” tool where Facebook lists additional sources it thinks might match your interests. In my experience, though, Facebook’s suggestions hardly challenged my typical set of sources. (The discover tool also didn’t work on my Facebook phone app due to a bug.)

Last month, Facebook launched a video encouraging its members to “keep an open mind” during the U.S. elections by seeking out other points of view. It suggested we use its search box to look up news topics, which it presents from a wide range of sources. But Facebook search is one of its least intuitive products, and there’s no way to pin a topic to have it show up in our News Feed.

“Changing your mind isn’t the point. Being open to it is,” Facebook said. I couldn’t agree more—but as a product, Facebook makes it harder, not easier, for its members to do this.

“Blue Feed, Red Feed” proves Facebook has the data and insight to present us viewpoints we might disagree with. If the Journal could make a tool to expose us to both sides, why couldn’t the world’s largest social network?

(THE WALL STREET JOURNAL)
